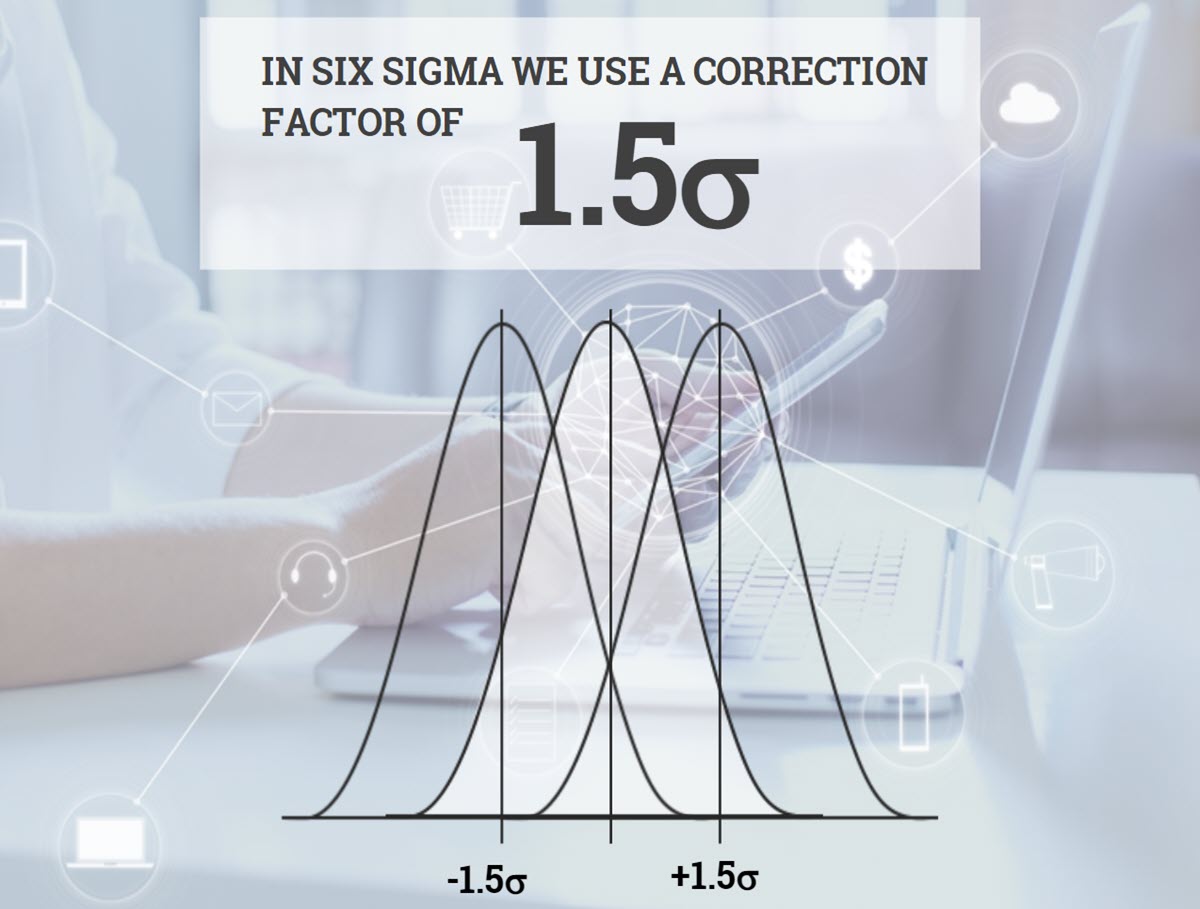

One of Six Sigma’s most hotly debated features is the infamous 1.5-sigma shift. Here’s how it works. In Six Sigma we assume that the customer’s actual experience will be worse than what we predict during capability analysis. We add a “fudge factor” that assumes that the process will shift by approximately 1.5 standard deviations. I’ve learned through experience that the derivation of the word assume is “ass u me”. As a rule I don’t encourage using assumptions and I’d like to go on record as officially advocating that we get rid of this one.
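For readers who want to see the arithmetic behind the shift, here is a minimal sketch. It assumes a normally distributed characteristic and counts only the tail beyond the nearer specification limit (the one-sided convention that gives the familiar 3.4 defects per million). The function names `upper_tail` and `dpmo` are my own, made up for illustration.

```python
import math


def upper_tail(z):
    """Return P(Z > z) for a standard normal variable Z."""
    return 0.5 * math.erfc(z / math.sqrt(2))


def dpmo(sigma_level, shift=1.5):
    """Defects per million opportunities beyond the nearer spec limit.

    Assumes the process mean has drifted `shift` standard deviations
    toward that limit, so the effective distance is sigma_level - shift.
    """
    return upper_tail(sigma_level - shift) * 1_000_000


if __name__ == "__main__":
    # Compare the predicted defect rate with and without the assumed shift.
    for level in (3.0, 4.5, 6.0):
        print(f"{level} sigma: shifted {dpmo(level):.4g} DPMO, "
              f"unshifted {dpmo(level, shift=0.0):.4g} DPMO")
```

With the default shift, a "six sigma" process is scored as if the limit were only 4.5 standard deviations from the mean, which is where the roughly 3.4 DPMO figure comes from. Set `shift=0.0` and the same process shows far fewer predicted defects, so the quoted number depends heavily on the assumption.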

Let me say that the assumption that the customer’s experience will be worse than the capability analysis prediction is very likely correct. A process capability analysis is, by design, rigged to produce the best possible results. We take extreme precautions to control every known source of variation. We set up the process with experts. We perform a rigorous measurement systems analysis and use freshly calibrated gauges and test equipment. We limit our source materials to a single, qualified supplier. We plot the data on control charts and remove special causes of variation as they appear.

When we’re done we have a process that’s as close to perfect as we can make it. Will things go downhill from there? Of course! By precisely 1.5 sigma? Probably not. In a Six Sigma organization the process should improve over time. Should we estimate the amount of the improvement and factor that into our long-term forecasts? Maybe. Maybe not. The value added by forecasting the future is questionable at best. And what is the 1.5-sigma shift if not a forecast adjustment?

The originators of Six Sigma took a stab at “guesstimating” the amount of deterioration in performance between the process and the customer. I tip my hat to them; it was worth a try. But after 30+ years of use the 1.5-sigma shift still distracts people from more valuable contributions of Six Sigma. The DMAIC framework is one example. Another is the leader-driven project selection approach that I’ve seen used to make improvements thousands of times. By removing this red herring maybe we can focus on what’s truly important.

What are your thoughts? I’m truly interested. Please comment below.
