It’s Time To Ditch the 1.5-Sigma Shift

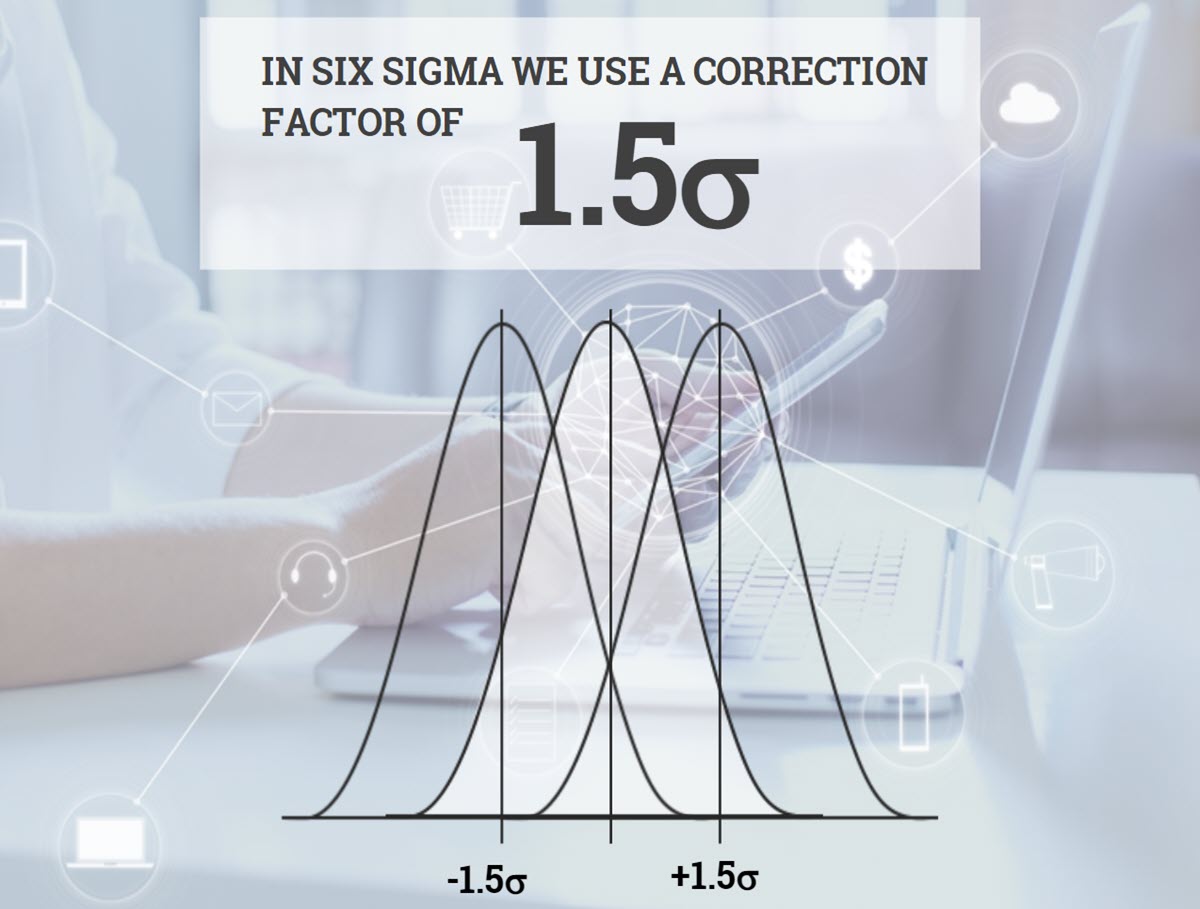

One of Six Sigma’s most hotly debated features is the infamous 1.5-sigma shift. Here’s how it works. In Six Sigma we assume that the customer’s actual experience will be worse than what we predict during capability analysis. We add a “fudge factor” that assumes that the process will shift by approximately 1.5 standard deviations. I’ve learned through experience that the derivation of the word assume is “ass u me”. As a rule I don’t encourage using assumptions and I’d like to go on record as officially advocating that we get rid of this one.

Let me say that the assumption that the customer’s experience will be worse than the capability analysis prediction is very likely correct. A process capability analysis is, by design, rigged to produce the best possible results. We take extreme precautions to control every known source of variation. We set up the process with experts. We perform a rigorous measurement systems analysis and use freshly calibrated gauges and test equipment. We limit our source materials to a single, qualified supplier. We plot the data on control charts and remove special causes of variation as they appear.

When we’re done we have a process that’s as close to perfect as we can make it. Will things go downhill from there? Of course! By precisely 1.5-sigma? Probably not. In a Six Sigma organization the process should improve over time. Should we estimate the amount of the improvement and factor that into our long-term forecasts? Maybe. Maybe not. The value added by forecasting the future is questionable at best. And what is the 1.5-sigma shift if not a forecast adjustment?

The originators of Six Sigma took a stab at “guestimating” the amount of deterioration in performance between the process and the customer. I tip my hat to them; it was worth a try. But after 30+ years of use the 1.5-sigma shift still distracts people from more valuable contributions of Six Sigma. The DMAIC framework, for example. Another is the leader-driven project selection approach that I’ve seen used to make improvements thousands of times. By removing this red herring maybe we can focus on what’s truly important.

What are your thoughts? I’m truly interested. Please comment below.

21 responses to “It’s Time To Ditch the 1.5-Sigma Shift”

Yes, I agree we need to see the big picture of DMAIC and the benefits of LSS. To be honest, processes always drift in the long term; yes, we can debate how much!

In my opinion, the 1.5 sigma shift is used because we can’t predict process capability in the longer term. That’s why long-term sigma = short-term sigma − 1.5.
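The relationship in the comment above can be made concrete. This is a minimal sketch, assuming the usual Six Sigma convention of counting defects in one tail of a normal distribution; the function name `dpmo_from_short_term_z` is hypothetical and does not come from the article.

```python
from statistics import NormalDist

def dpmo_from_short_term_z(z_short_term, shift=1.5):
    """Defects per million opportunities after applying an assumed shift."""
    # Long-term Z is the short-term Z less the assumed shift.
    z_long_term = z_short_term - shift
    # One-sided tail area beyond the nearer limit (assumed normal model).
    tail_probability = NormalDist().cdf(-z_long_term)
    return tail_probability * 1_000_000

print(round(dpmo_from_short_term_z(6.0), 1))    # 3.4 DPMO with the 1.5 shift
print(dpmo_from_short_term_z(6.0, shift=0.0))   # roughly 0.001 DPMO without it
```

The whole gap between the familiar 3.4 defects per million and roughly one defect per billion comes from the assumed shift, which is why the size of that assumption matters so much.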

I agree with your assessment. I believe short-term process capability measured at the beginning of a project is not necessarily a good indicator of long-term capability. I know processes shift with time. But explaining this in terms of a 1.5 sigma shift results in either perplexed, blank stares or long explanations and defense of the concept.

When teaching Six Sigma, I speak of the idea, but not the details. There is always a curious soul in the class who wants to dive into the details. I tell them I would be happy to tell all after class. In a bar. With a beer.

Clever, Griff. Free beer! I need to remember this trick.

In your article with Quality Digest https://www.qualitydigest.com/may01/html/sixsigma.html you wrote “There is a saying among engineers and scientists: All models are wrong, but some models are useful. The traditional normal model, for example, is certainly wrong, but it’s often still useful. The question, then, is whether the 1.5 sigma adjustment creates a model that’s more useful than the traditional model. I believe it does. While all models simplify reality, the traditional model oversimplifies reality. It makes things look much better to us than they look to our customers.”

So, I think we can all agree that the 1.5 shift as a model is wrong, but are you now concluding that it is no longer useful?

Correct. I might even say it’s harmful in the sense that it creates much confusion and sheds little light on things. Furthermore, I haven’t seen studies showing if it results in more accurate forecasts. After 30 years, there should be evidence of the value of this arbitrary “correction factor.”

In the past, I have proposed the 1.5 shift as a useful benchmarking tool when comparing apples to oranges (like comparing the baggage process at the airport to pizza deliveries), but in reality this turned out to be an academic exercise to try to explain the value of a Sigma Quality Level.

Forecasting, as you know quite well, has also advanced significantly in statistics, with much of the complexity built into data science modeling, and with artificial intelligence and machine learning adjusting the models as the incoming data changes and evolves.

Kudos for igniting an honest reflection of the 1.5 shift debate.

FYI… I also shared your post on the International Standard for Lean Six Sigma (ISLSS) page on LinkedIn should you want to check in and comment. https://www.linkedin.com/posts/islss_its-time-to-ditch-the-15-sigma-shift-activity-6906248279101636608-9phU

Respectfully, Steven Bonacorsi

You write “A process capability analysis is, by design, rigged to produce the best possible results.” I can honestly say I’ve never “rigged” any capability analysis. A lot of capability studies have ended up with assignable causes discovered, enabling valuable learning and improvement. (You know what an unstable process means for the interpretation of the Cp and Cpk numbers.)

If the analysis reveals a capable process, yes it is best case but it is also an honest case. The study should do its best to include the sources of variation that will routinely be “there”. Not always possible, I’d agree, but the monitoring of future results using control charts should assure that assignable causes are made visible asap. Per these learnings the “capability” of the process can be re-evaluated.

Does performance tend to degrade over time? This is a fair bet in my experience. Who, or what, performs at its best day in and day out? All this means is that the process is performing below its capability, and for this last statement to be meaningful the capability analysis should be a fair one, and not rigged.

I would like to see the 1.5-sigma shift binned, and also the short-term and long-term capability phrases. Why not just stick to capability and performance: We have the indexes that reflect this – C for capability and P for performance, i.e. Cp/Cpk/Pp/Ppk. While I always prefer graphs over index values, when one knows how to interpret these four index values you know pretty quickly how the process has been doing.

Thanks for your thoughtful comment, Scott. I’m not saying the PCA is dishonest. You are confounding that term with “unfair”; I didn’t intend to be so literal. My point is that during a PCA by design only a subset of what will happen to the process long-term is studied. If nothing else the PCA is restricted to a finite time interval, which means that other things will happen to the process in time.

You also say that by using the index values (Cp/Cpk/Pp/Ppk) you know pretty quickly how the process has been doing. Exactly. Has been doing, in the past. Let’s not use something like the 1.5-sigma shift to pretend that we can accurately see the future by playing with these indices. This can be something we study separately, perhaps in magazine and journal papers. But in my opinion, it should no longer be embedded in the Six Sigma body of knowledge.
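For readers less familiar with the four indices discussed in this exchange, here is a minimal sketch of how they differ. It assumes equal-size rational subgroups and estimates within-subgroup sigma with a pooled standard deviation, which is only one of several common estimators. The function name and the data are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev, variance

def capability_indices(subgroups, lsl, usl):
    """Return Cp, Cpk (within-subgroup sigma) and Pp, Ppk (overall sigma)."""
    all_values = [x for subgroup in subgroups for x in subgroup]
    grand_mean = mean(all_values)
    # Capability: variation inside subgroups only (pooled, equal sizes assumed).
    sigma_within = sqrt(mean([variance(sg) for sg in subgroups]))
    # Performance: variation of all the data, including drift between subgroups.
    sigma_overall = stdev(all_values)
    nearest_limit = min(usl - grand_mean, grand_mean - lsl)
    return {
        "Cp": (usl - lsl) / (6 * sigma_within),
        "Cpk": nearest_limit / (3 * sigma_within),
        "Pp": (usl - lsl) / (6 * sigma_overall),
        "Ppk": nearest_limit / (3 * sigma_overall),
    }

# Hypothetical data: three subgroups whose averages drift upward over time.
drifting = [[9.9, 10.0, 10.1], [10.1, 10.2, 10.3], [10.3, 10.4, 10.5]]
indices = capability_indices(drifting, lsl=9.0, usl=11.0)
print({name: round(value, 2) for name, value in indices.items()})
```

Because the subgroup averages drift, Pp and Ppk come out lower than Cp and Cpk. The gap describes what the process has already done; it is not a forecast of what it will do next.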

Yes, of course, 1.5 sigma shifts have no meaning whatsoever and miss the point. The point is are we operating predictably? Six Sigma attempted to redefine or add things to Dr. Shewhart’s original theory. This is a trap many have fallen into going back to Pearson in 1935. Dr. Shewhart proved time and time again his theory worked in practice and many of us have had the same experience. Six Sigma has put us (quality folk) backward for decades.

It’s past time to go back and study Shewhart.

“One purpose in considering the various definitions of quality is merely to show that in any case, the measure of quality is a quantity which may take on different numerical values. In other words, the measure of quality, no matter what the definition of quality may be, is a variable.”

Shewhart, W. A. (1931). Economic control of quality of manufactured product. Van Nostrand.

I think Six Sigma added a management framework within which improvement is pursued.

Not really, Dr. Shewhart provided all the framework we need in his last book, in just three steps. He supercharged the scientific method with his charts at each step.

“The three steps constitute a dynamic scientific process of acquiring knowledge.”

Shewhart, W. A. (1939). Statistical method from the viewpoint of quality control. Washington: The Graduate School, The Department of Agriculture.

DMAIC is confusing and unnecessary.

“In The Music Man the con-man Prof. Harold Hill sells band instruments and uniforms and then tells the kids that they can play music if they will “just think about the notes and then play them.” In many ways this “think system” is similar to what you are asked to do with the DMAIC approach to quality improvement. In the define phase of DMAIC you and your fellow band members are told to think about your process inputs and identify those inputs that have the greatest impact on the process outcomes. (As if R&D has not already done this.) To this end the tools offered in this “think system” are cause-and-effect diagrams, failure mode effect analysis, and designed experiments.” Donald Wheeler, The “think system” for improvement, first, you guess what the problem might be…., May 2018.

The “Think System” for Improvement

Thanks for the reply.

Allan,

I disagree with your comment of “think”. Of course, I “think” because I/we have a brain and that is what it is there for amongst many other things. When I approach a DMAIC project I follow the path of physics/geometry and ask, “How is the process supposed to work?” and “How is it actually working?” I “think” through this process so that my brain makes logical conclusions based on two simple questions and a path. There is no “put on the uniform and it will play in this process” but it does involve turning on the brain and thinking. Teams I work with easily and quickly come to root cause and process improvement and often drive defects to zero.

If we ban the term 1.5 sigma shift, are we also abandoning the term six sigma? As we all know, the percent of product outside the six sigma band is based on a 4.5 sigma shift. Is a six sigma process now a 4.5 sigma process? Really the underlying idea is to produce product well within the limits that define product usability, whether these limits are specification limits or not.

“Really the underlying idea is to produce product well within the limits that define product usability, whether these limits are specification limits or not.”

I agree with this. I don’t think we should abandon the term Six Sigma though. It’s more a brand than a mathematical concept at this point.

Hi Tom,

As a statistician, I’m not certain that I entirely agree. Where I would strongly agree is that “as a six sigma organization”, our processes and systems should routinely improve through our strategies and subsequent actions; hence our product and service performance should also improve. That said, left “on their own”, most processes / systems tend toward entropy or degradation rather than self-organizing improvement, and there is natural drift in essentially any process.

That said, “improvement” is assessed via many metrics, commonly reduction of variation (as opposed to variety) and hence improvement in consistency. Reduction in variation = reduction in standard deviation. That implies that the “drift” is reduced in absolute amount, but not of necessity in terms of the “number of standard deviations” associated with that drift. Of course, in such circumstances, we should also be able to progress toward whatever level (minimum, target, or maximum) is “ideal”.

So … I am mixed in my perspective.

Best,

Rick

Well done, Rick. Very clearly stated.

I don’t think ditching is the right thing to do. Educating is needed more than anything else. Most of the people working in SS environments have no clue what these two terms exactly mean. And companies still using Cp and Cpk are even more confused. What is not so useful to you might be useful for someone else, especially if they know how to use it properly for their unique needs.

Working at a global leader in Six Sigma, I have used both LT and ST capability analyses, and found them both to be very useful. It depends a lot on how you use SS, and what you are trying to do with CA. For a quick snapshot of how well a process is performing we would do a ST study of 5 samples. This was never used for DMAIC projects, where we always collected LT data for CA capturing all sources of variation, and the question of shift did not come up. It is never perfect, but much better than anything else I have used. Similarly, capability for a SS program can also be estimated by rolling up the Zlt numbers (without estimating from Zst). Having a good understanding of these two is really what is needed. Simply using DMAIC doesn’t make you a SS program or company.

As far as I can remember, the 1.5 sigma shift was based on actual data and not myth. It can be estimated for your own unique business and used accordingly. You cannot do a LT study every time it is required, as quite often it is simply impossible to do so within a limited time span. You don’t have to use Zst if you don’t want to, but for a quick snapshot of how well your process is performing it works well, or can be made to work even better if you can study and then customize the amount of shift for your own unique situation.

As more data becomes available and better analysis software and tools become available, taking samples is not even necessary, as all the data is being captured and we don’t have to estimate the Zlt or Zst for ppm. We can get the actual ppm and then convert it to a Z number if needed.
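The ppm-to-Z conversion mentioned in this comment can be sketched as follows. This assumes a normal model and a one-sided tail, and the function name `z_from_ppm` is hypothetical. No shift is added or removed here: the result is simply the Z value equivalent to the defect rate that was actually observed.

```python
from statistics import NormalDist

def z_from_ppm(ppm):
    """Convert an observed defect rate in parts per million to an equivalent Z."""
    defect_fraction = ppm / 1_000_000
    # inv_cdf requires 0 < p < 1, so a rate of exactly 0 ppm cannot be converted.
    return -NormalDist().inv_cdf(defect_fraction)

print(round(z_from_ppm(3.4), 2))     # 4.5
print(round(z_from_ppm(66807), 2))   # 1.5
```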

The 1.5 Sigma shift was meant to be a big picture adjustment at the program level (i.e., the department is at 4.5 sigma). It was meant to boost morale and encourage continued improvement, so I’m for keeping it in that capacity.

I agree with Tom, the discussion about the 1.5 sigma shift is a distraction. We should focus our attention and resources on quickly catching process shifts of all sizes.

2.5 standard deviations: Point Outside the Limits test

1.5 to 2.5 standard deviations: Run Beyond 2s test

1.0 to 1.5 standard deviations: Run Beyond 1s test

0.5 to 1.0 standard deviations: Run About the Central Line test

I recall from your SSBB training years ago your statement about the awesome power of Shewhart’s Control Charts and I haven’t looked back since. Incredible, I can see a process shift of even 0.5 standard deviations from across the room and take immediate action! Thank you, Tom.
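The four run tests named in the list above are not defined in the comment. The sketch below assumes the common Western Electric style definitions: one point beyond three sigma, two of three consecutive points beyond two sigma on the same side, four of five beyond one sigma on the same side, and eight consecutive points on one side of the central line. The function name and the data are hypothetical, and the central line and sigma are taken as already known.

```python
def detect_signals(values, center, sigma):
    """Return (index, rule) pairs for each point that completes a signal."""
    z = [(v - center) / sigma for v in values]
    signals = []
    for i in range(len(z)):
        if abs(z[i]) > 3:
            signals.append((i, "point outside the limits"))
        for side in (1, -1):  # check above and below the central line
            last3 = [side * x for x in z[max(0, i - 2): i + 1]]
            last5 = [side * x for x in z[max(0, i - 4): i + 1]]
            last8 = [side * x for x in z[max(0, i - 7): i + 1]]
            if len(last3) == 3 and sum(x > 2 for x in last3) >= 2:
                signals.append((i, "run beyond 2s"))
            if len(last5) == 5 and sum(x > 1 for x in last5) >= 4:
                signals.append((i, "run beyond 1s"))
            if len(last8) == 8 and all(x > 0 for x in last8):
                signals.append((i, "run about the central line"))
    return signals

# Hypothetical data: a sustained upward shift of 0.6 sigma after four points.
shifted = [0.2, -0.3, 0.1, -0.1] + [0.6] * 8
print(detect_signals(shifted, center=0.0, sigma=1.0))
```

In this example no single point comes anywhere near the limits, yet the run of eight points above the central line flags the small shift.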

Quite right, Tom. Though we do talk about the 1.5 sigma shift in our training, we are careful to qualify it exactly as you state it here. It’s an average as observed by Motorola during the early days of Six Sigma. How likely is it that any given process will be exactly on the average? Very unlikely, as you say.
